Linear Mixed Effects Models

BIOL709/BSCI339

Chick Weights

Load some chick weight growth experiment data

data("ChickWeight")There are several variables here, Four treatements (1-4), a bunch of chicks (48) and weight measurements over 20 days of feeding. For something like this, ggplot is pretty handy:

require(ggplot2)

ggplot(ChickWeight, aes(x = Time, y = weight, col=Chick))+geom_line ()+ geom_point() + facet_wrap(~Diet)

Clearly, there is variability among individuals, and among treatments, in the slope of growth.

Some linear models

Here’s a linear model with main and interaction effects of time and diet: \[Y_{ij} = (\beta + \gamma_j) T_i + \epsilon_{ij}\]

Note that in this formulation, intercept is 0, but the slope has a mean (\(\beta\)) and a diet specific effect (\(\gamma_j\))

Chick.lm <- lm(weight ~ Time:Diet - 1, data = ChickWeight)

summary(Chick.lm)$coef## Estimate Std. Error t value Pr(>|t|)

## Time:Diet1 8.929575 0.2010880 44.40631 8.087458e-188

## Time:Diet2 10.502625 0.2640756 39.77128 4.507463e-167

## Time:Diet3 12.629732 0.2640756 47.82620 2.201654e-202

## Time:Diet4 11.774316 0.2698661 43.63022 1.996372e-184Note that the “-1” coerced all of the intercepts to 0. Also, all the effects are significant: Weight increases with Time on average at rate 6.85 g/day, some diets lead to a higher overall mean.

A quiz … what’s the difference between the following models? Which one matches the model above

Chick.lm1 <- lm(weight ~ Time * Diet, data = ChickWeight)

Chick.lm2 <- lm(weight ~ Time + Diet, data = ChickWeight)

Chick.lm3 <- lm(weight ~ Time : Diet, data = ChickWeight)Visualizing the regression lines (with ggplot)

ggplot(ChickWeight, aes(x = Time, y = weight, col=factor(Chick)))+geom_line ()+ geom_point() + facet_wrap(~Diet) +

geom_line(data = cbind(ChickWeight, y.hat = predict(Chick.lm)), aes(x = Time, y = y.hat), col="darkred", lwd = 1.5) + theme(legend.position="none")

We will be plotting this kind of prediction plot alot, so let’s make a function that makes it quicker. It will be function of a model fit:

plotFit <- function(ChickModel, ...)

ggplot(ChickWeight, aes(x = Time, y = weight, col=factor(Chick))) +

geom_point(alpha = 0.5) + facet_wrap(~Diet) +

geom_line(data = cbind(ChickWeight, y.hat = predict(ChickModel)), aes(x = Time, y = y.hat), ...) + theme(legend.position="none")

plotFit(Chick.lm, lwd=1.5, col="darkred")

anova(Chick.lm)## Analysis of Variance Table

##

## Response: weight

## Df Sum Sq Mean Sq F value Pr(>F)

## Time 1 2042344 2042344 1759.757 < 2.2e-16 ***

## Diet 3 129876 43292 37.302 < 2.2e-16 ***

## Time:Diet 3 80804 26935 23.208 3.474e-14 ***

## Residuals 570 661532 1161

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1ANOVA tells us everything is significant … but we need to check diagnostic plots.

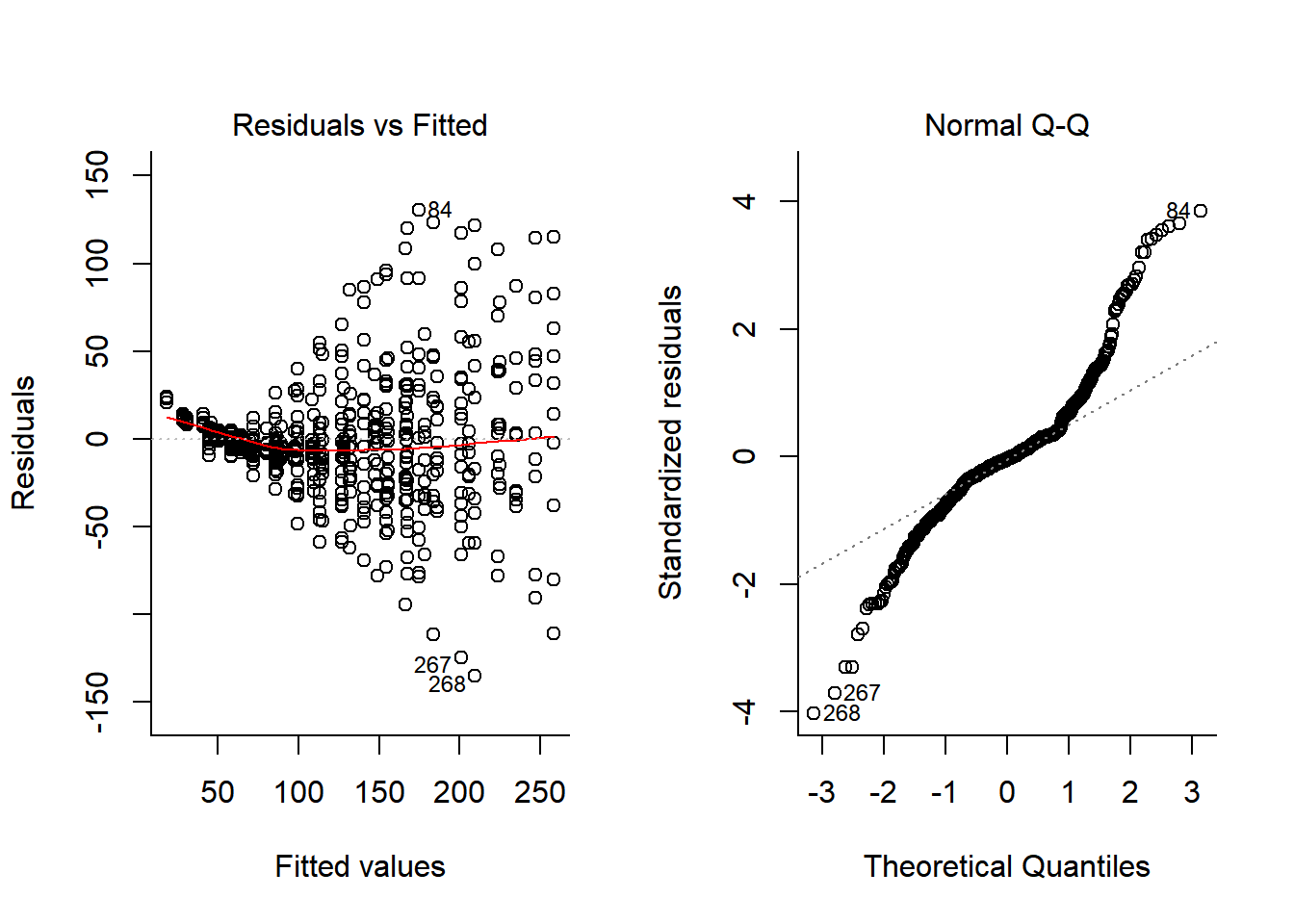

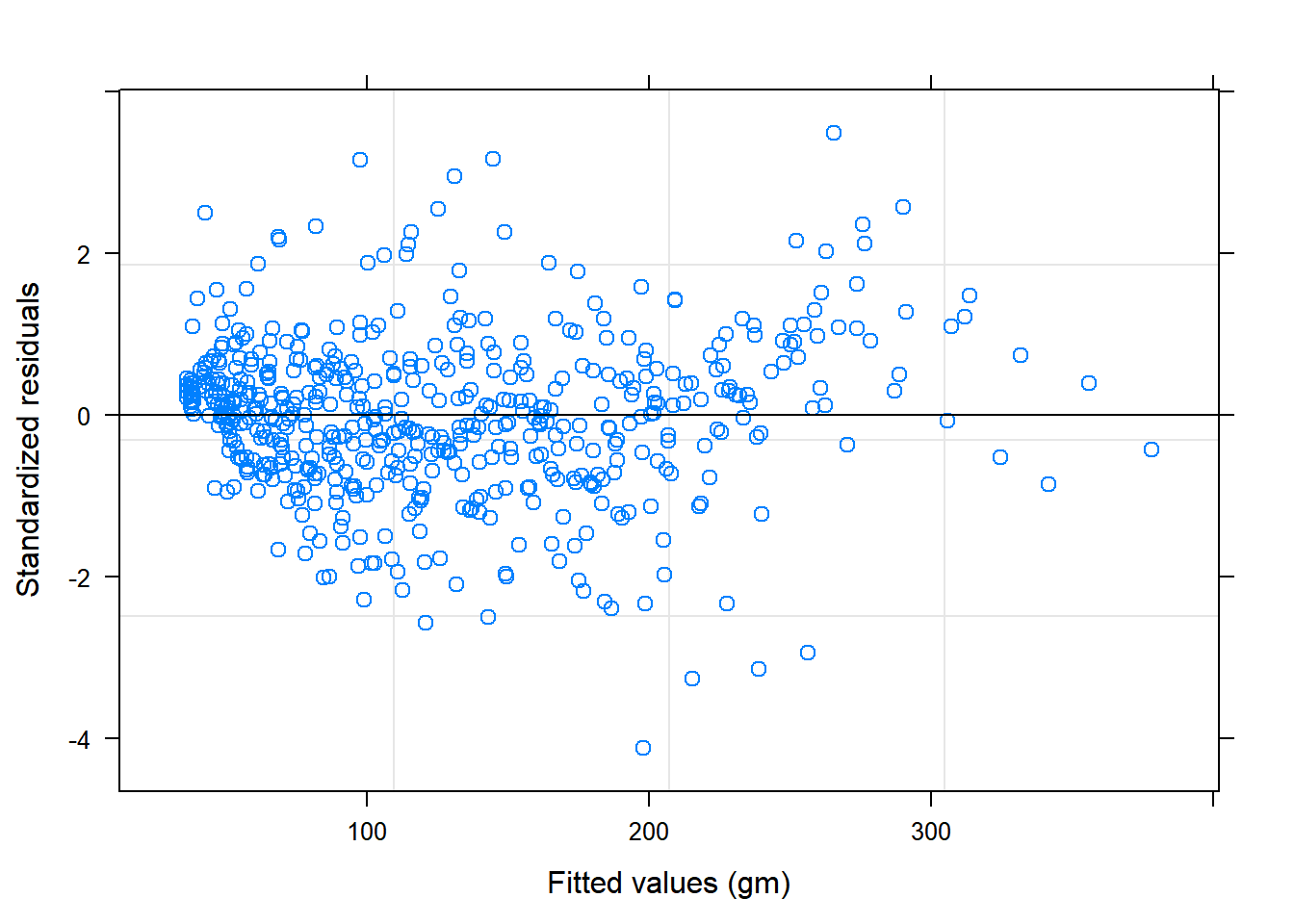

plot(Chick.lm, 1:2)

Both heteroskedasticity and kurtosis in the residuals are big problems! A lot of that is because each individual bird is quite different for the others.

One option to deal with this is to include individual bird in the model:

Chick.lm2 <- lm(weight ~ Time : Diet + Chick, data = ChickWeight)

anova(Chick.lm2)## Analysis of Variance Table

##

## Response: weight

## Df Sum Sq Mean Sq F value Pr(>F)

## Chick 49 530105 10818 16.806 < 2.2e-16 ***

## Time:Diet 4 2047136 511784 795.029 < 2.2e-16 ***

## Residuals 524 337315 644

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1plotFit(Chick.lm2)

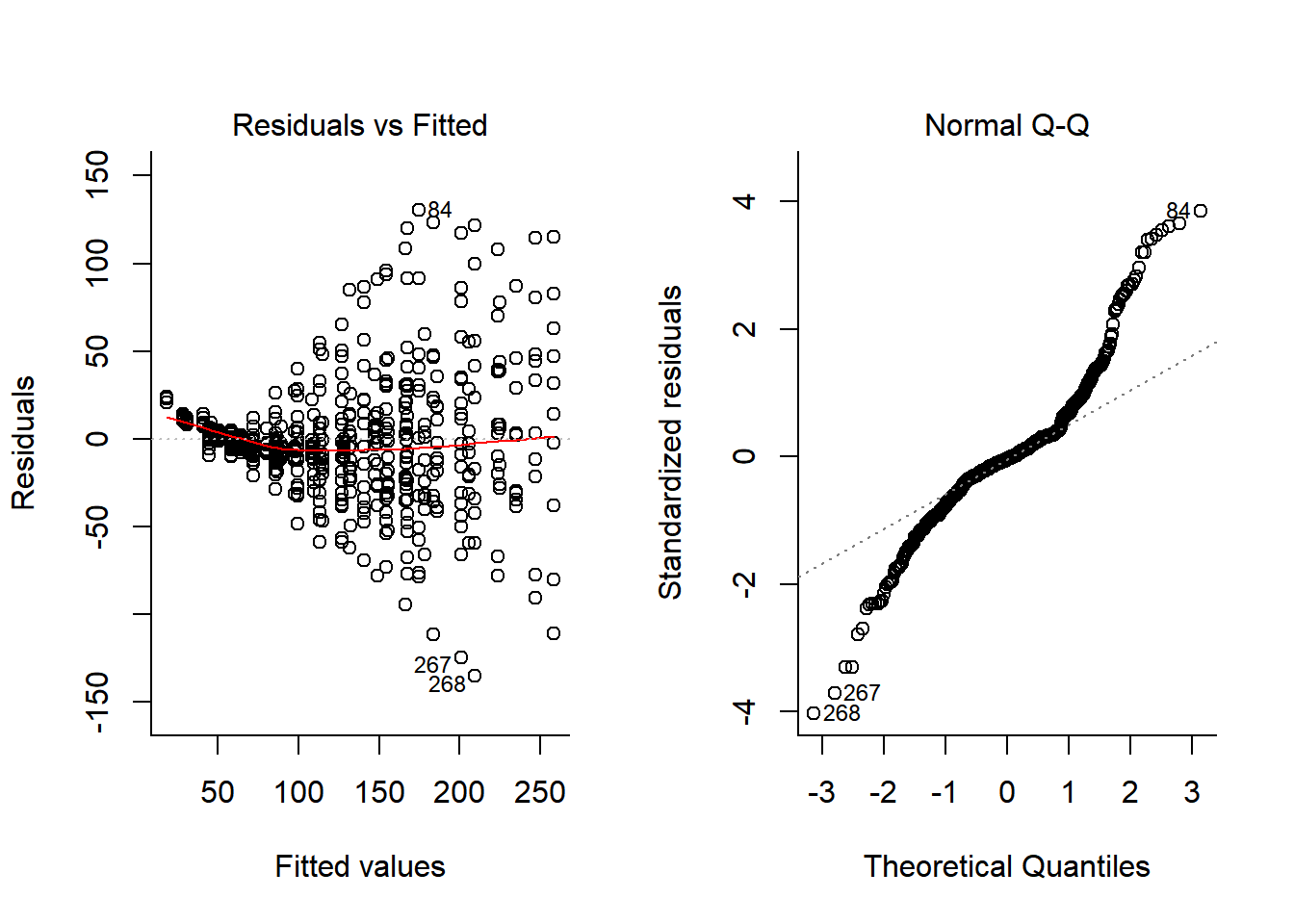

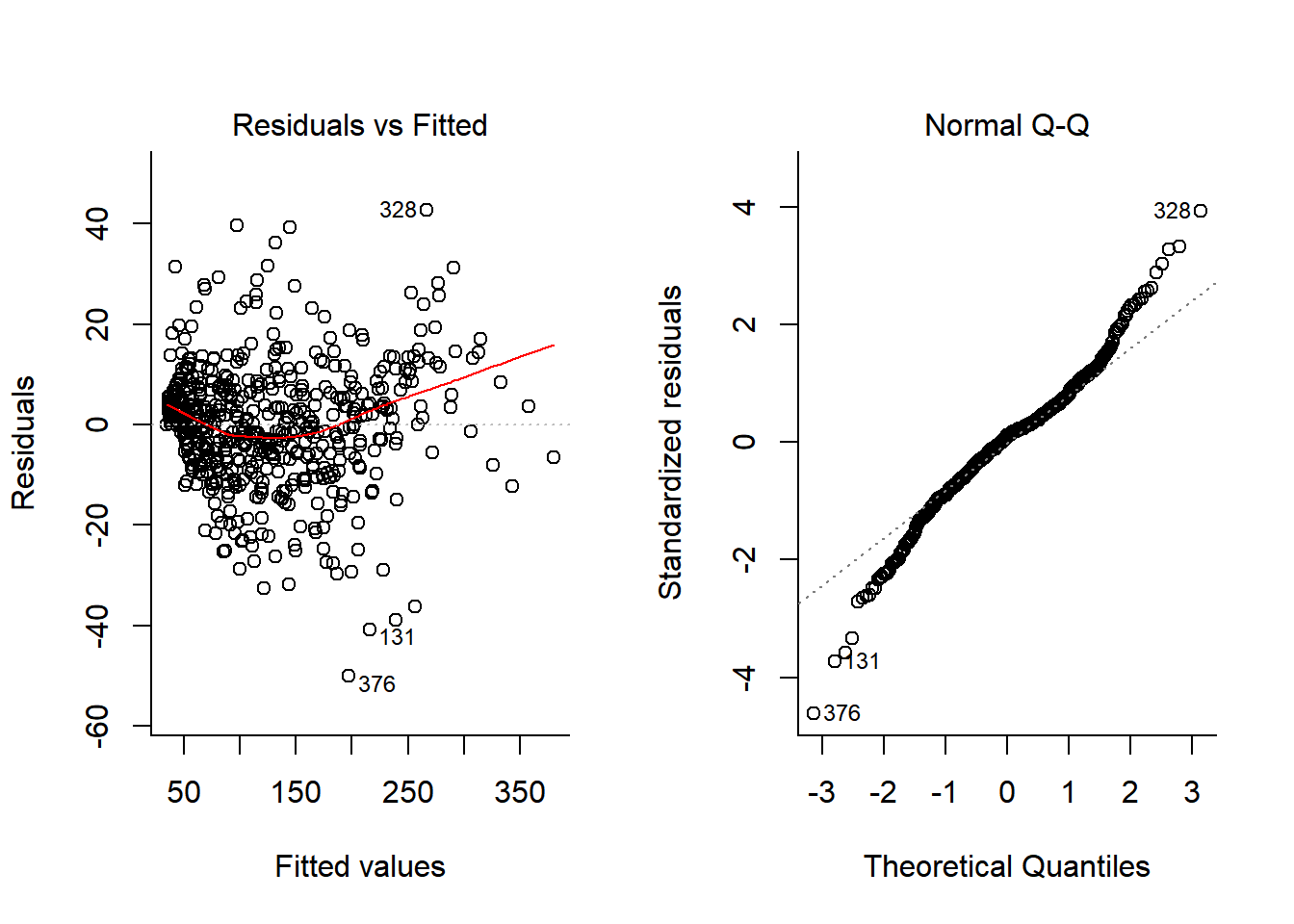

plot(Chick.lm, 1:2)

We’ve absorbed a lot of the variability, but there’s still strong structure to the residuals. Somewhat better would be to let each individual have a unique slope as well. For fun, I also added a second order polynomial term to the model:

Chick.lm3 <- lm(weight ~ (Diet * poly(Time,2)) + Time : (Chick-1), data = ChickWeight)

anova(Chick.lm3)## Analysis of Variance Table

##

## Response: weight

## Df Sum Sq Mean Sq F value Pr(>F)

## Diet 4 8733214 2183303 13985.650 < 2.2e-16 ***

## poly(Time, 2) 2 2038109 1019055 6527.788 < 2.2e-16 ***

## Diet:poly(Time, 2) 6 84264 14044 89.962 < 2.2e-16 ***

## Time:Chick 46 555143 12068 77.306 < 2.2e-16 ***

## Residuals 520 81177 156

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1plotFit(Chick.lm3)

What about now:

plot(Chick.lm3, 1:2)

Well - this is now a much better model (With a terrific R^2 (summary(Chick.lm3)$r.squared = 0.9929361) and decent looking residuals. The big problem, however, is that this has cost us ENORMOUS degrees of freedom! We’ve estimated length(Chick.lm3$coef) = 62 coefficients.

Quick comment on “updating”

Before we fix this problem, a quick comment on a “smarter” workflow for iteratively building models using the update function. This function basically takes your model and adds or removes features as desired.

For example, the Chick.lm1 model above was given by:

Chick.lm1$call## lm(formula = weight ~ Time * Diet, data = ChickWeight)Whereas:

Chick.lm2$call## lm(formula = weight ~ Time:Diet + Chick, data = ChickWeight)Chick.lm3$call## lm(formula = weight ~ (Diet * poly(Time, 2)) + Time:(Chick -

## 1), data = ChickWeight)You can generate the second model by updating the first one:

Chick.lm2b <- update(Chick.lm1, . ~ . - 1 - Time - Diet + Chick)

Chick.lm2b$call## lm(formula = weight ~ Chick + Time:Diet - 1, data = ChickWeight)As typical in R formula notation, the ‘.’ means whatever was there, the minuses mean remove the variable, and plus means add the variable.

Generate the third model by updating the second one:

Chick.lm3b <- update(Chick.lm2b, . ~ . + poly(Time, 2))The update command lets you add additional structural elements, like correlations and random effects (as below), change the response variable, or change the data - without rewriting the entire model call.

Mixed effects models

The model fitted above looks something like this: \[Y_{ijk} = \alpha_i + (\beta_i + \kappa_j) T_{ijk} + \gamma_j T^2_{ijk} + \epsilon_{ij}\]

Where \(i \in \{1,2,3,4\}\) refers to diet, \(j \in {1,2, ..., 48}\) refers to individual chicken (each of which has a unique slope - but not intercept) and \(k\) to replicates.

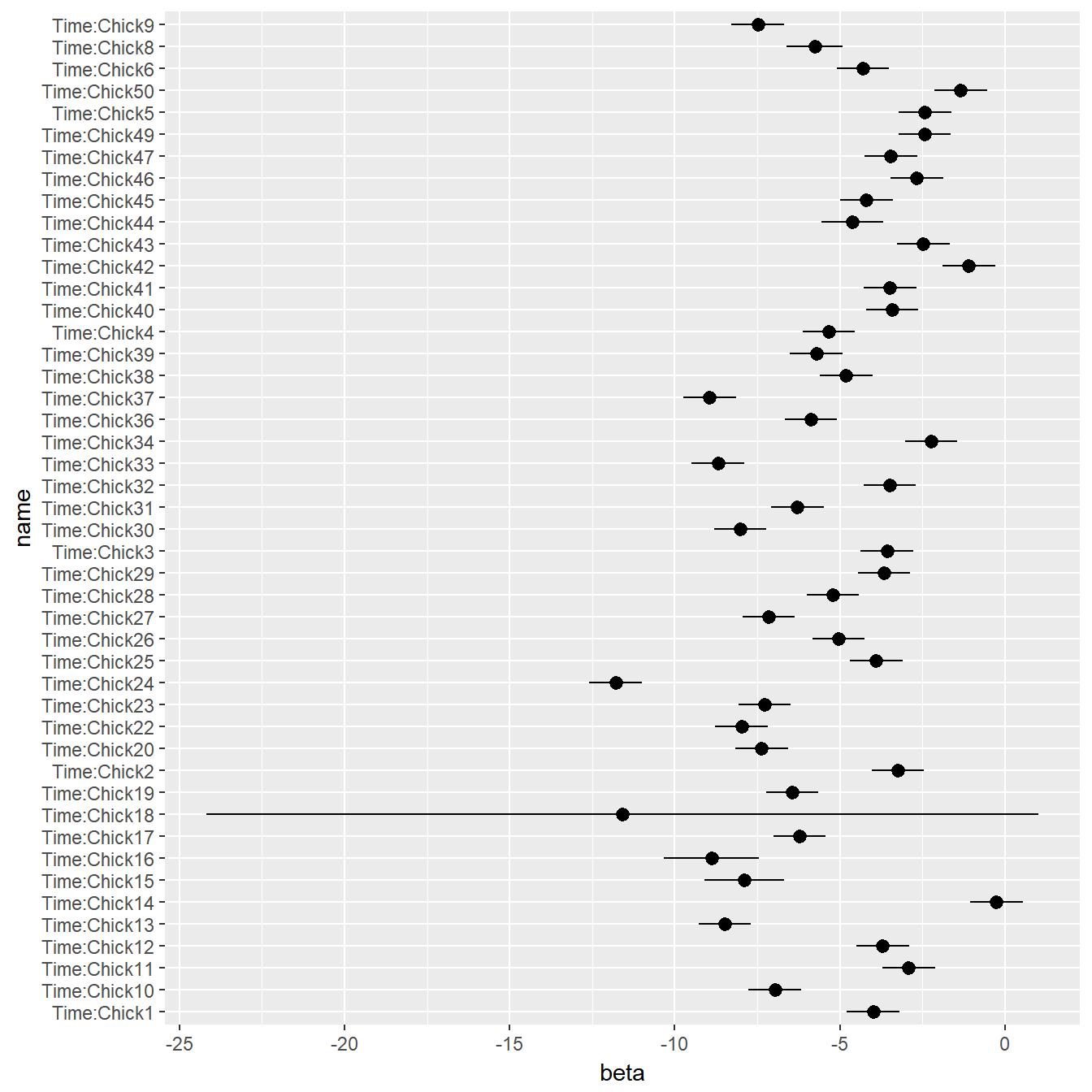

In fact, we don’t ACTUALLY care about individual birds! Wouldn’t it be great if we could take all of those \(\kappa_j\)’s and model them as a random variable? I.e.: \(\kappa_j \sim {\cal N}(\mu_\kappa, \sigma_\kappa)\)? Out of curiosity, lets look at all of the coefficients for the individual chicks:

require(plyr) # for mutate

betas <- data.frame(summary(Chick.lm3)$coef)

betas <- mutate(betas, name = row.names(betas),

beta = Estimate, lo = beta - 2*Std..Error, hi = beta + 2*Std..Error)

betas <- subset(betas, grepl("Chick", name))

ggplot(betas, aes(y=beta, x=name, ymin=lo, ymax=hi)) + geom_pointrange() + coord_flip()

That is exactly what a mixed effects model does! It’s called “mixed” because there are “fixed effects” (here, the diet, and the time are fixed effects, and the individual chick will be “random”).

Mixed effect notation in general

Formula:

\[{\bf y}_i = {\bf X}_i\beta + Z_i{\bf b}_i + \epsilon_i; \,\,\, i = 1, ..., M\] \[{\bf b}_i = {\cal N}(0, \Phi); \,\,\, \epsilon_i \sim {\cal N}(0, \sigma^2 {\bf I})\]

where \(\beta\) is the (p-dimensional) vector of fixed effects \({\bf b}_i\) is the q-dimensional vector of random effects, \({\bf X}_i\) (\(n \times p\)) is a the known fixed effect regressor matrix, and \({\bf Z}_i\) (\(n \times q\)) is the random effects data matrix.

Applied to the chicks

The nlme package allows you to fit mixed effects models. So does lme4 - which is in some ways faster and more modern, but does NOT model heteroskedasticity or (!spoiler alert!) autocorrelation.

Let’s try a model that looks just like our best model above, but rather than have a unique Time slope for each chick, it will be a random variable. Thus: \[Y_{ijk} = \alpha_i + (\beta_i + K) T_{ijk} + \gamma_i T^2_{ijk} + \epsilon_{ij}\]

Where the slope on Time is given by the random parameter K, which has some distribution: \(K \sim {\cal N}(0, \sigma_k^2)\)

require(nlme)

Chick.lme1 <- lme(fixed = weight ~ Diet * poly(Time,2),

random = ~ (Time-1)|Chick, data = ChickWeight, method = "ML")The summary output of this function is massive … below a somewhat truncated version:

## summary(Chick.lme1)## Linear mixed-effects model fit by maximum likelihood

## Data: ChickWeight

## AIC BIC logLik

## 4789.38 4850.414 -2380.69

##

## Random effects:

## Formula: ~(Time - 1) | Chick

## Time Residual

## StdDev: 2.46184 12.3999

##

## Fixed effects: weight ~ Diet * poly(Time, 2)

## Value Std.Error DF t-value p-value

## (Intercept) 101.2062 6.15721 520 16.437042 0.0000

## Diet2 19.6553 10.50328 46 1.871350 0.0677

## Diet3 39.3727 10.50328 46 3.748609 0.0005

## Diet4 33.0455 10.50499 46 3.145699 0.0029

## poly(Time, 2)1 1033.6519 95.04407 520 10.875501 0.0000

## poly(Time, 2)2 70.4572 20.65100 520 3.411808 0.0007

## Diet2:poly(Time, 2)1 360.4877 161.54458 520 2.231506 0.0261

## Diet3:poly(Time, 2)1 812.8318 161.54458 520 5.031626 0.0000

## Diet4:poly(Time, 2)1 519.1017 161.67971 520 3.210679 0.0014

## Diet2:poly(Time, 2)2 52.6077 34.38622 520 1.529907 0.1266

## Diet3:poly(Time, 2)2 209.4174 34.38622 520 6.090154 0.0000

## Diet4:poly(Time, 2)2 -6.3517 34.92972 520 -0.181842 0.8558The fixed effects estimates should be similar as in the linear model, but here we also have a standard deviation (2.46) around the time slopes.

Plotting Mixed-Effects fits and diagnostics

Plot the fit identically as above:

plotFit(Chick.lme1)

There is a complicated set of plotting functions for lme objects. For example, you can just plot the residuals against fitted values:

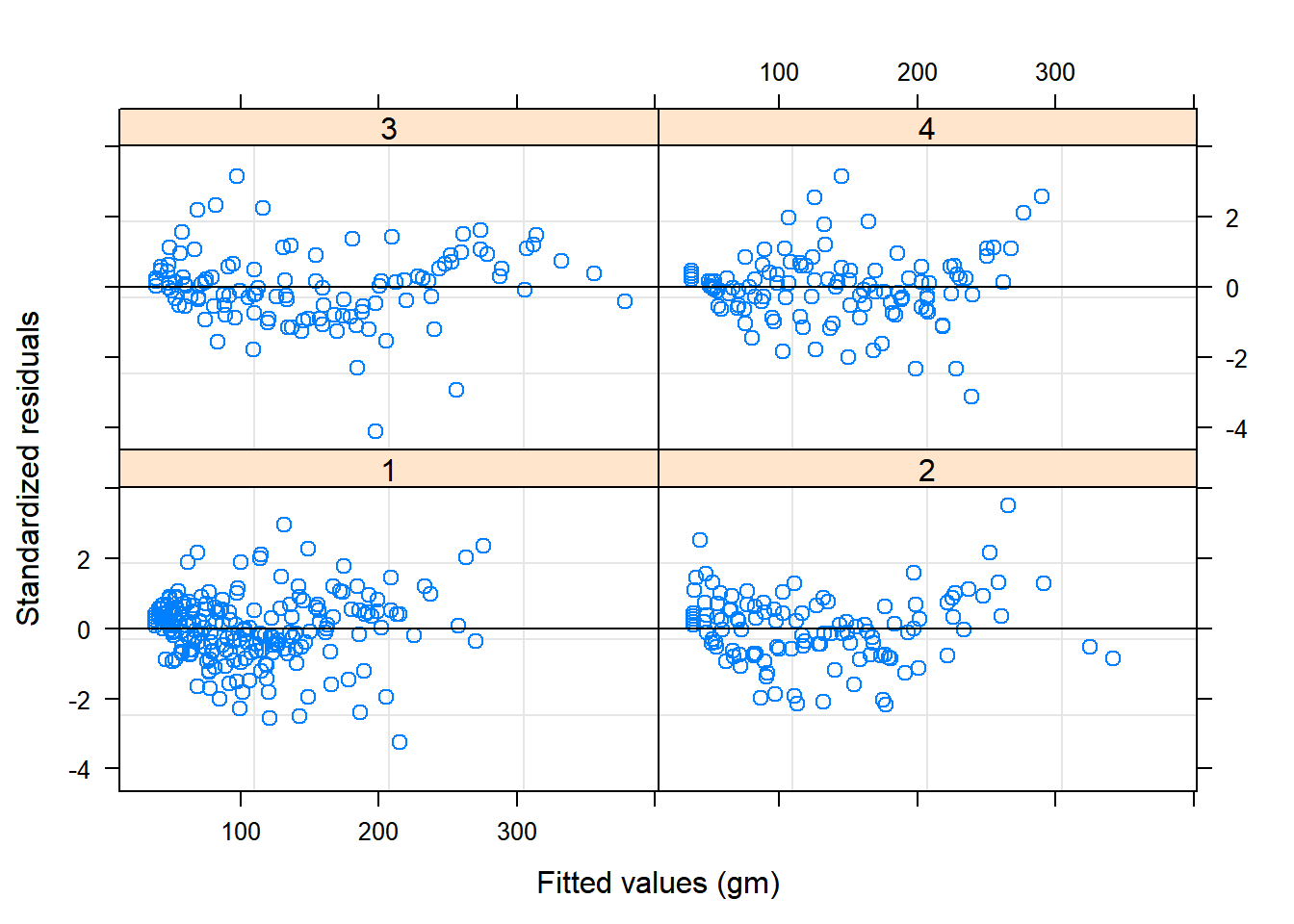

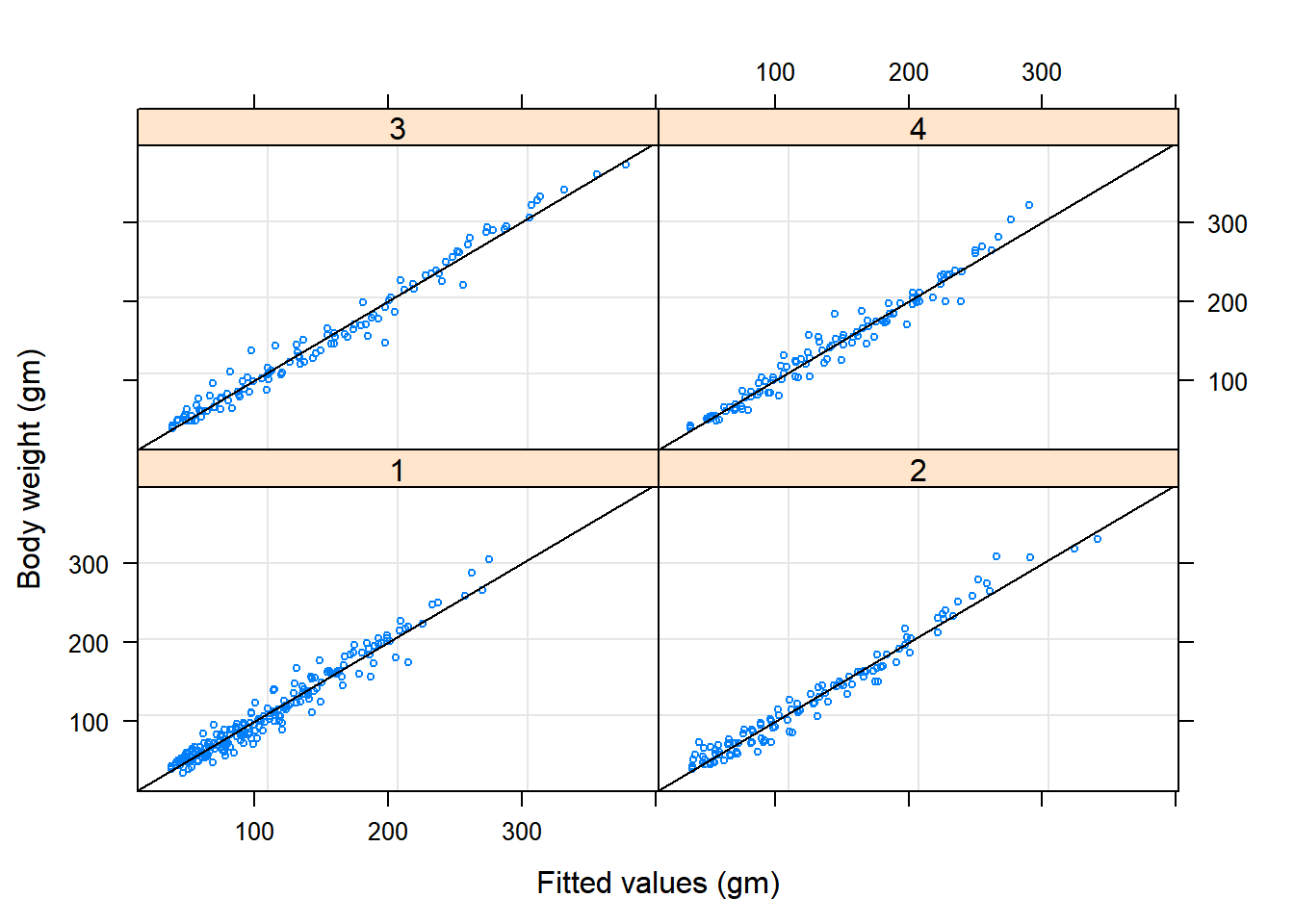

plot(Chick.lme1)

plot(Chick.lme1, resid(., type = "p") ~ fitted(.) | Diet, abline = 0) Or observed weights versus fitted weights by Diet:

Or observed weights versus fitted weights by Diet:

plot(Chick.lme1, weight ~ fitted(.) | Diet, abline = c(0,1), cex=0.5)

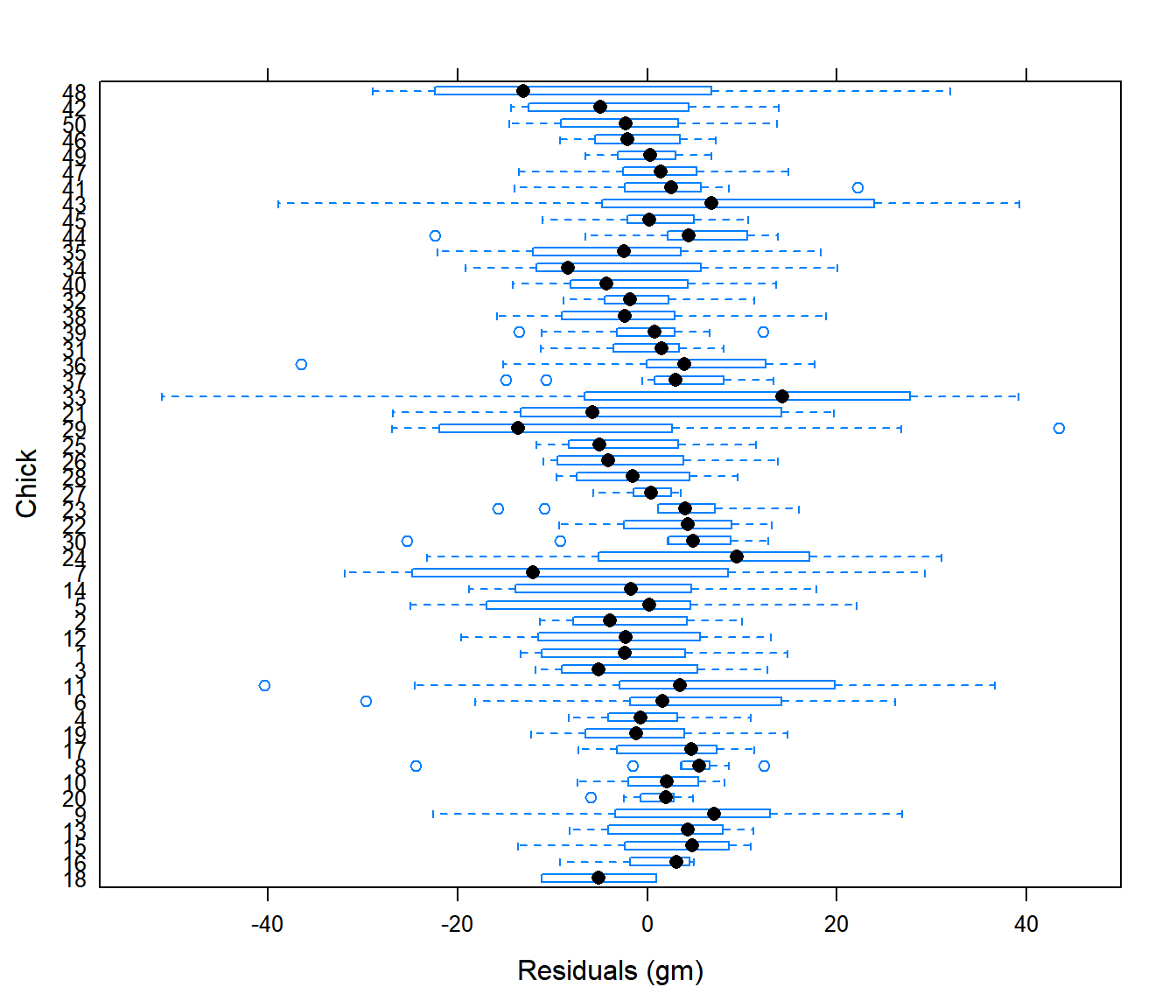

Or - just the residuals of each chick:

plot(Chick.lme1, Chick ~ resid(.))

AUTOCORRELATION WARNING!

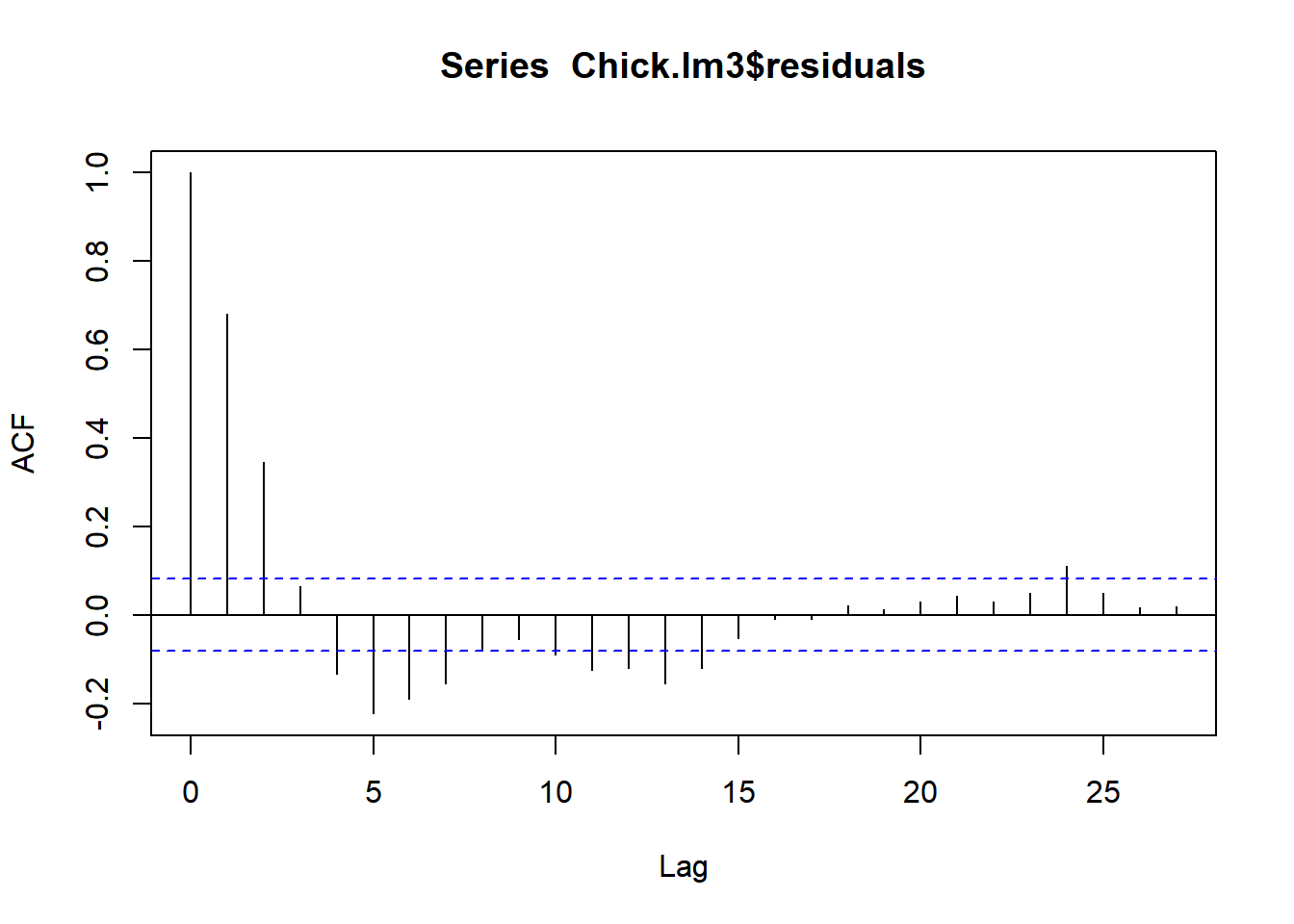

acf(Chick.lm3$residuals)

Oh no!

LME with serial autocorrelation

The following model adds 1st order autocorrelation to a 2nd order time model with a unique diet effect:

Chick.lme2 <- lme(fixed = weight ~ Diet + poly(Time,2)-1,

random = ~ (Time-1)|Chick, data = ChickWeight,

correlation = corAR1())Notice that the random grouping term is Time - 1 | Chick, i.e. the slopes for each chick is unique and modeled as a random variable, but the intercepts are always 0. Furthermore the intercept of the main effect variable is also set to 0 (via the -1). This makes sense biologically, but it is also the only way I could make a model with a serial autocorrelation converge.

This is - anyway - an excellent model. The summary:

## summary(Chick.lme2)## Linear mixed-effects model fit by REML

## Data: ChickWeight

## AIC BIC logLik

## 4208.346 4247.488 -2095.173

##

## Random effects:

## Formula: ~(Time - 1) | Chick

## Time Residual

## StdDev: 3.291071 15.73268

##

## Correlation Structure: AR(1)

## Formula: ~1 | Chick

## Parameter estimate(s):

## Phi

## 0.869144

## Fixed effects: weight ~ Diet + poly(Time, 2) - 1

## Value Std.Error DF t-value p-value

## Diet1 119.1190 5.93250 46 20.079049 0

## Diet2 118.7396 6.83475 46 17.372931 0

## Diet3 116.6206 6.83475 46 17.062892 0

## Diet4 120.8724 6.83473 46 17.685023 0

## poly(Time, 2)1 1327.5352 79.13447 527 16.775690 0

## poly(Time, 2)2 128.1690 14.11702 527 9.079045 0reveals the additional estimate of the serial autocorrelation coefficient: 0.87.

So - is diet a significant covariate?

This is a “simple” question that is not, actually, that instant to answer, since it is difficult to interpret the output of the summary.

The best solution is just to use AIC, comparing a model with and without diet (and, say, with and without autocorrelation), e.g.:

Chick.lme <- lme.formula(fixed = weight ~ Diet * poly(Time, 2), data = ChickWeight, random = ~(Time - 1) | Chick, method = "ML")

Chick.lmes <- list(ChickModel.Diet = Chick.lme,

ChickModel.NoDiet = update(Chick.lme, fixed = .~.-Diet:poly(Time,2)),

ChickModel.Diet.AutoCorr = update(Chick.lme, correlation = corAR1()),

ChickModel.NoDiet.AutoCorr = update(Chick.lme, fixed = .~.-Diet:poly(Time,2), correlation = corAR1()))

ldply(Chick.lmes, AIC)## .id V1

## 1 ChickModel.Diet 4789.380

## 2 ChickModel.NoDiet 4842.816

## 3 ChickModel.Diet.AutoCorr 4206.544

## 4 ChickModel.NoDiet.AutoCorr 4245.153If this “AIC table” is unsatisfactory, there’s a nice function called aictab in the package AICcmodavg:

require(AICcmodavg)

aictab(Chick.lmes)##

## Model selection based on AICc:

##

## K AICc Delta_AICc AICcWt Cum.Wt LL

## ChickModel.Diet.AutoCorr 15 4207.40 0.00 1 1 -2088.27

## ChickModel.NoDiet.AutoCorr 9 4245.47 38.07 0 1 -2113.58

## ChickModel.Diet 14 4790.13 582.73 0 1 -2380.69

## ChickModel.NoDiet 8 4843.07 635.67 0 1 -2413.41This table - known sometimes as a “delta AIC” table - sorts the models by increasing information criterion, and provides useful ancillary summaries. These tables are often reported in journal articles. Note that it automatically chose the “AICc” as a criterion based on sample sizes and degress of freedom considerations. But the ultimate conclusion is the same.

Exercise: Does Diet Matter?

- Summarize the conclusion of this analysis.

- How many parameters are estimated in the “best” model? Report all of their values.

- Expand the model selection to include models without the second order Diet term. Are any of those “better”.

- Expand the model selection to include corresponding linear models, with individual chick fixed effects. How do they compare?