Many blind users benefit from technologies to support their movement through unfamiliar indoor or outdoor environments, including their navigation to destinations and their awareness of the surroundings.

Personal Object Recognizer

We show that blind people can train a mobile application with only a few snapshots of their objects of interest (e.g., everyday items in their home). We use transfer learning to adapt a deep learning network trained for generic image recognition to user-specific recognition tasks, given only a few examples. Our approach yielded accuracies over 90% for some blind participants, outperforming models trained by sighted participants.

Clustering User Interactions in Outdoor Orientation Apps

We analyze the behavior of people who are blind during their interaction with mobile technologies that support orientation and mobility. By representing each blind user of a mobile navigation application as a stream of remotely collected interaction logs, we uncover underlying behavioral patterns and gain insight into application usage. We propose a scalable solution for identifying naturally formed clusters from the common interaction patterns of thousands of blind users and for extracting semantic meanings for the clusters.
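
As a rough illustration of the idea, the sketch below turns each user's log into a vector of action frequencies and groups users with a small k-means routine. The source does not name the clustering algorithm, so k-means is only a stand-in here, and the event names in `ACTIONS` are hypothetical rather than taken from any real application. The most frequent action of a centroid serves as a crude label for its cluster.

```python
from collections import Counter

# Hypothetical event names; a real application would log its own vocabulary.
ACTIONS = ["start_route", "whereami", "search_poi", "mark_location"]


def profile(log):
    """Turn one user's stream of logged actions into relative frequencies."""
    counts = Counter(log)
    total = len(log) or 1
    return [counts[action] / total for action in ACTIONS]


def sq_dist(p, q):
    """Squared Euclidean distance between two profiles."""
    return sum((x - y) ** 2 for x, y in zip(p, q))


def kmeans(points, k, iterations=20):
    """Plain k-means, seeded with the first k points so runs are repeatable."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign every user to the nearest centroid.
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c]))
                  for p in points]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def dominant_action(centroid):
    """Crude semantic label: the action that dominates the cluster centroid."""
    return ACTIONS[max(range(len(ACTIONS)), key=lambda i: centroid[i])]


logs = [
    ["start_route"] * 8 + ["whereami"] * 2,
    ["search_poi"] * 7 + ["mark_location"] * 3,
    ["start_route"] * 9 + ["whereami"],
    ["search_poi"] * 6 + ["mark_location"] * 4,
]
labels, centroids = kmeans([profile(log) for log in logs], k=2)
cluster_names = [dominant_action(c) for c in centroids]
```

With these toy logs, the route-following users and the place-searching users end up in separate clusters. At the scale of thousands of users, a library implementation and a principled choice of the number of clusters would be needed.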

Automatic synthesis of understandable American Sign Language (ASL) animations can increase information accessibility for many people who are deaf. In ASL, facial expressions are grammatically required and essential to the meaning of sentences. Their automatic synthesis is challenging since it requires temporal coordination of the face, synchronization of movements with words in linguistically diverse sentences, and blending between facial expressions.

NSF Award: 1506786

Collaboration with Boston University (C. Neidle) and Rutgers University (D. Metaxas).

Syntactic Facial Expression Synthesis

We propose a novel framework in which time series of MPEG-4 face and head movements, automatically extracted from an annotated ASL corpus, drive the motion of a 3D signing avatar. To avoid idiosyncratic aspects of a single performance, we use probabilistic generative models for learning a latent trace from multiple recordings of different sentences. Both metric-based evaluations and user studies with deaf users indicate that this approach is more effective than prior state-of-the-art approaches for producing sign language animations with facial expressions.

User Evaluation of Linguistic Technology

To estimate perceptual losses in our facial expression models, we conduct rigorous methodological research to understand how experimental design choices affect the outcome of studies.

- We release stimuli engineered to evaluate ASL facial expressions and demonstrate the critical importance of involving signers early in the stimuli design process.

- We measure the effect of different baselines and the impact of participants’ demographics and technology experience in evaluation studies.

- We employ eye-tracking with ASL signers to automatically and unobtrusively detect the quality of ASL animations being viewed.

- The high cost and time needed for user studies increase the importance of developing automatic metrics to benchmark progress, so we develop a novel, sign-language-specific similarity-scoring algorithm for facial expression synthesis, which is significantly correlated with the judgments of ASL signers.

We assess and improve the usability of Tigres, a Python library that enables scientists to quickly develop and test their analysis workflows on laptops and desktops and then move them to production HPC resources and large data volumes. Initial results from empirical user data reveal Tigres’ strengths and limitations, identify new features to prioritize, and offer insights on methodological aspects of user evaluation that can advance the relatively new research field of API usability.

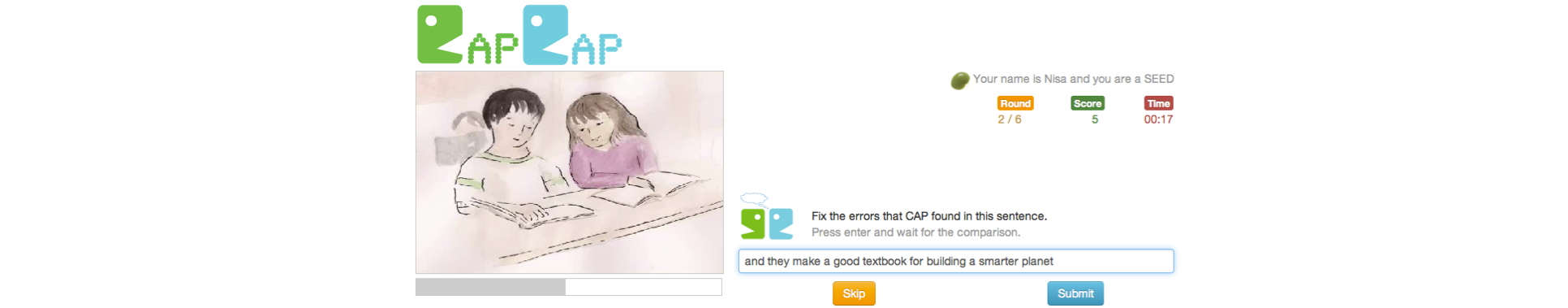

Video captions and audio transcriptions make audio content accessible for people who are deaf or hard-of-hearing, support indexing and summarization, and can help second language learners acquire vocabulary. With an output-agreement game called CapCap, we demonstrate that a crowd of non-experts, including non-native English speakers, can improve automatic speech recognition error rates of English video captions through gamification. CapCap incorporates both text and speech input from the players, with the prospect of collecting self-transcribed speech data.

6-dot Braille, linear in nature and limited to 64 characters, has difficulty keeping up with the vast number of mathematical structures and symbols in complex writing systems, which imposes additional cognitive load on blind people when accessing this information.

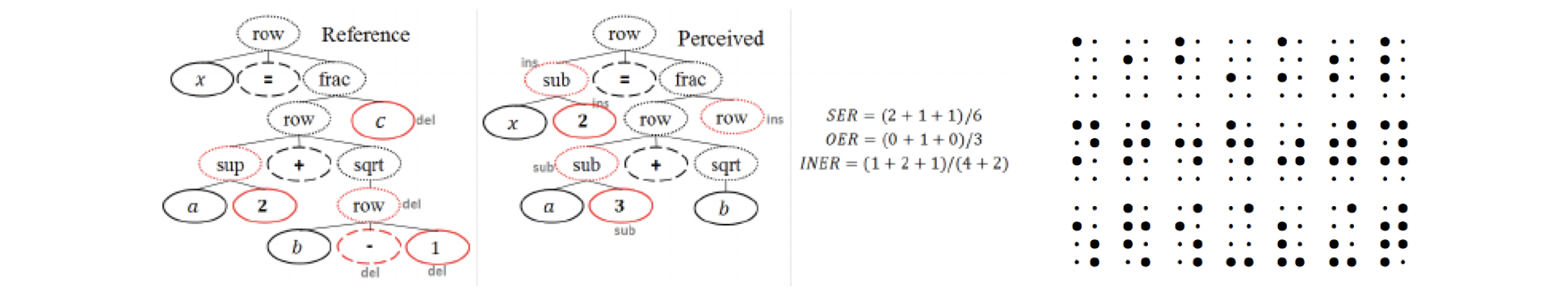

Math-to-Speech

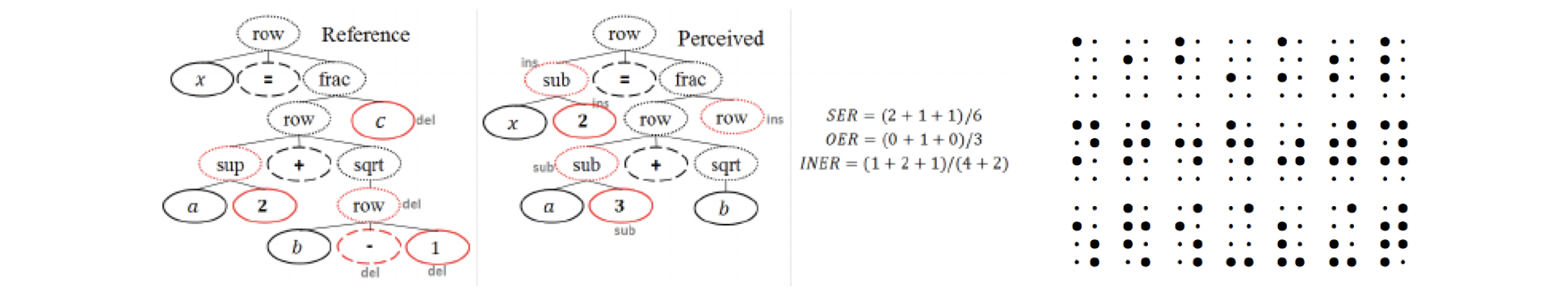

We adopt the acoustic modality, which is often favored by blind people, to render mathematical expressions. Our Greek math-to-speech system is implemented in MathPlayer, a MathML-to-speech platform. Interested in replicable approaches to iteratively assess the performance of our system, we explore quantitative metrics and experimental methods that best estimate human perception of the rendered mathematical formulae. We introduce the first WER-like metrics for reporting math-to-speech perception as well as math recognition accuracies that allow comparison across systems. Our metrics are automatically calculated based on MathML tree edit distance.
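
To give a feel for what a WER-like score over MathML can look like, the sketch below flattens two MathML strings into preorder token sequences and divides their edit distance by the length of the reference. This is a deliberate simplification: it uses a sequence (Levenshtein) edit distance rather than a true tree edit distance, and the function names `tokens`, `edit_distance`, and `error_rate` are illustrative, not part of MathPlayer or of the metrics described above. It also assumes MathML written without XML namespaces.

```python
import xml.etree.ElementTree as ET


def tokens(mathml):
    """Flatten a MathML string into preorder tokens such as 'mi:a' or 'mfrac'."""
    out = []
    for node in ET.fromstring(mathml).iter():
        text = (node.text or "").strip()
        out.append(f"{node.tag}:{text}" if text else node.tag)
    return out


def edit_distance(ref, hyp):
    """Levenshtein distance between two token lists (unit-cost edits)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def error_rate(reference, perceived):
    """Edits needed to turn the perceived formula into the reference,
    normalized by the number of reference tokens (WER-style)."""
    ref = tokens(reference)
    return edit_distance(ref, tokens(perceived)) / len(ref)


reference = "<math><mfrac><mi>a</mi><mi>b</mi></mfrac></math>"
perceived = "<math><mfrac><mi>a</mi><mi>d</mi></mfrac></math>"
rate = error_rate(reference, perceived)
```

Here the listener confused one identifier out of four reference tokens, so `rate` is 0.25. A tree-based distance would additionally distinguish structural errors, such as a misplaced fraction boundary, from simple symbol substitutions.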

8-dot Braille

We develop an 8-dot literary Braille code that covers both the modern and the ancient Greek orthography, including diphthongs, digits, and punctuation marks. Our method is language independent and achieves a compressed representation, retains connectivity with the 6-dot representation, preserves consistency in the transition rules, removes ambiguities, and allows for future extensions.
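
The capacity difference between the two cell sizes, and one way an 8-dot cell can stay connected to its 6-dot counterpart, can be sketched with the Unicode Braille Patterns block (U+2800 to U+28FF), where dot n corresponds to bit n-1 of the code point offset. The use of dot 7 as an added marker below is a hypothetical example and not the assignment made in the code described above.

```python
# Unicode Braille Patterns start at U+2800; dot n (1..8) sets bit n-1.
BRAILLE_BASE = 0x2800

SIX_DOT_CELLS = 2 ** 6    # 64 patterns, including the blank cell
EIGHT_DOT_CELLS = 2 ** 8  # 256 patterns


def cell(dots):
    """Return the Unicode Braille character for a set of raised dots (1..8)."""
    mask = 0
    for dot in dots:
        if not 1 <= dot <= 8:
            raise ValueError(f"dot out of range: {dot}")
        mask |= 1 << (dot - 1)
    return chr(BRAILLE_BASE + mask)


def uses_only_six_dots(ch):
    """True when the cell raises neither of the extra dots 7 and 8."""
    return (ord(ch) - BRAILLE_BASE) & 0b11000000 == 0


def six_dot_part(ch):
    """Drop dots 7 and 8, leaving the underlying 6-dot cell."""
    return chr(BRAILLE_BASE + ((ord(ch) - BRAILLE_BASE) & 0b00111111))


base = cell({1})       # dot 1 only, a valid 6-dot cell
marked = cell({1, 7})  # hypothetical 8-dot variant: same cell plus dot 7
```

In this sketch `six_dot_part(marked)` returns the same character as `base`, which illustrates how an 8-dot symbol can remain recognizable to a reader who already knows the 6-dot cell.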